This series is about the kind of assumption that sticks around for 50 years — and what happens when someone finally proves it wrong.

Open-addressing hash tables have long been capped by a log-bound on probe complexity. Everyone thought you needed to reorder elements to beat it. Turns out, you don’t. Just stop being greedy.

These posts explore the algorithms that cracked the bound, the mental models they upended, and the Rust code that brings it all to life.

Like the best breakthroughs, this one’s simple in hindsight — and wildly useful once you see it.

Why this matters

Hash tables aren’t just data structures — they’re infrastructure. They show up in databases, caches, compilers, memory allocators, network switches, and machine learning pipelines.

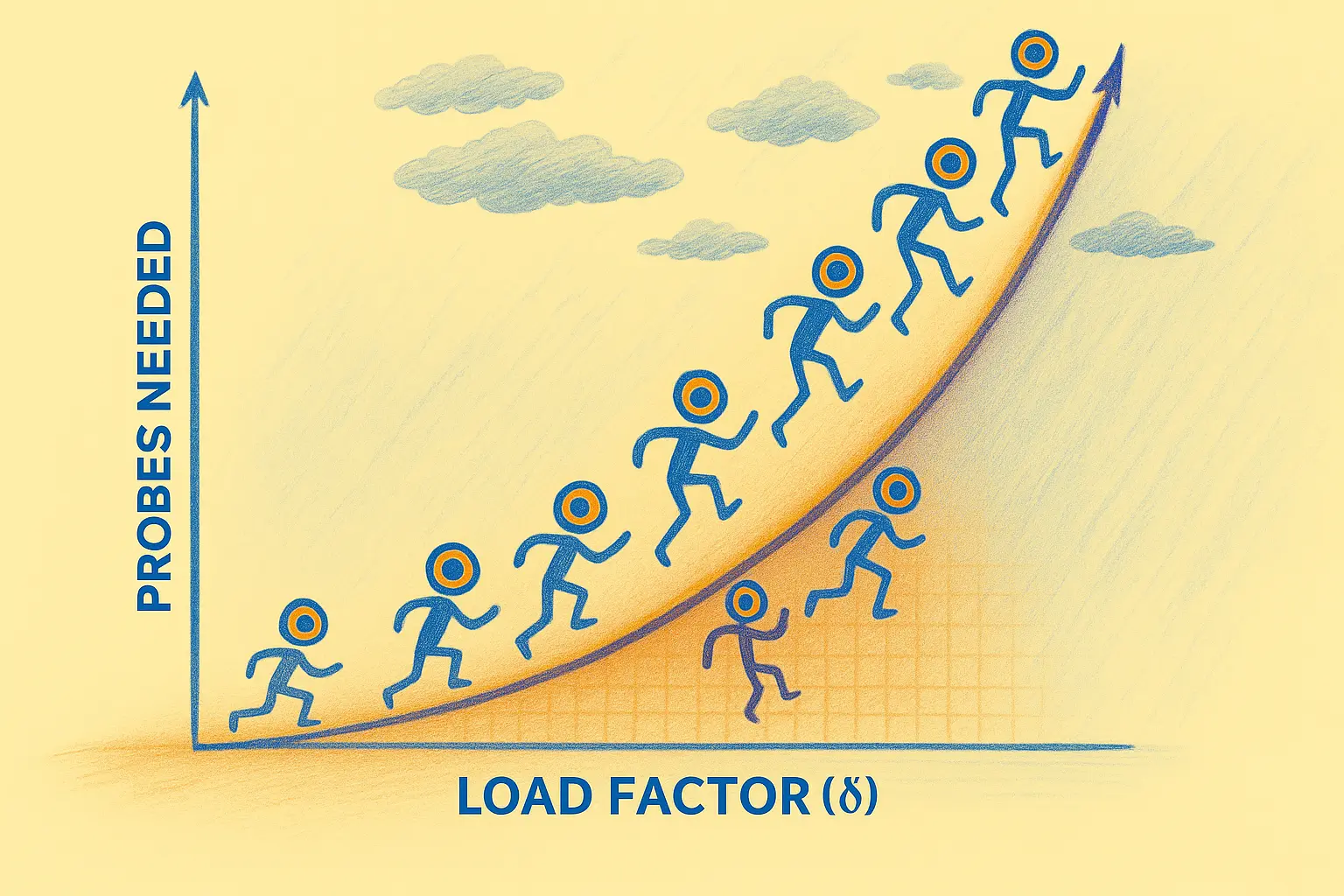

And for decades, we’ve built around a supposedly hard limit: the probe complexity of greedy open addressing grows as $\Theta(\log(1/\delta))$, where $\delta$ is the load factor.

In 2024, the paper Optimal Bounds for Open Addressing Without Reordering by Anshu, Dey, and Jayaram showed this bound can be beaten — without reordering and without cheating. By avoiding greedy insertion and spreading keys across multiple candidate slots, they achieved both sub-logarithmic expected probes and improved worst-case guarantees.

Faster, non-greedy insertions let us build tighter, leaner systems under high load. That means less overprovisioning, fewer cache misses, and smarter memory use — all without the complexity of reordering.

More than that, it’s a reminder that some of the biggest wins in systems design come not from more code, but from fewer assumptions.